
Definition: Row echelon form (REF)

Definition

An augmented matrix of a linear system is in row echelon form if it has the following properties:

- If a row does not consist entirely of zeros, then the first nonzero number in the row is a 1. We call this a leading 1.

- If there are any rows that consist entirely of zeros, then they are grouped together at the bottom of the matrix.

- In any two successive rows that do not consist entirely of zeros, the leading 1 in the lower row occurs farther to the right than the leading 1 in the higher row.
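The three properties above can be checked mechanically. The following is a minimal sketch, assuming the matrix is given as a list of rows of exact numbers; the function names `leading_index` and `is_row_echelon` are illustrative and not part of the original notes.

```python
def leading_index(row):
    """Return the column index of the first nonzero entry, or None for a zero row."""
    for j, value in enumerate(row):
        if value != 0:
            return j
    return None


def is_row_echelon(matrix):
    """Check the three row echelon form properties on a list of rows."""
    previous_lead = -1
    seen_zero_row = False
    for row in matrix:
        lead = leading_index(row)
        if lead is None:
            seen_zero_row = True
            continue
        if seen_zero_row:
            return False  # a nonzero row lies below a zero row
        if row[lead] != 1:
            return False  # the first nonzero entry is not a leading 1
        if lead <= previous_lead:
            return False  # the leading 1 did not move to the right
        previous_lead = lead
    return True


print(is_row_echelon([[1, 4, -3, 7], [0, 1, 6, 2], [0, 0, 1, 5]]))
print(is_row_echelon([[0, 0, 0], [1, 2, 3]]))
```

The first call should report a valid row echelon form; the second should fail because the zero row is not at the bottom.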

Definition: Reduced row echelon form (RREF)

Definition

A matrix is in reduced row echelon form if it is in row echelon form and has the following additional property:

- Each column that contains a leading 1 has zeros everywhere else in that column.
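As a rough illustration, the check below tests the row echelon properties together with the additional column property. It is a sketch under the assumption that the matrix is a list of rows of exact numbers; `is_reduced_row_echelon` is a hypothetical helper name.

```python
def is_reduced_row_echelon(matrix):
    """Check the REF properties plus the RREF column property."""
    previous_lead = -1
    seen_zero_row = False
    for i, row in enumerate(matrix):
        # Column of the first nonzero entry, or None for a zero row.
        lead = next((j for j, v in enumerate(row) if v != 0), None)
        if lead is None:
            seen_zero_row = True
            continue
        # REF properties: zero rows at the bottom, leading 1, staircase shape.
        if seen_zero_row or row[lead] != 1 or lead <= previous_lead:
            return False
        # RREF property: the pivot column is zero in every other row.
        if any(other[lead] != 0 for k, other in enumerate(matrix) if k != i):
            return False
        previous_lead = lead
    return True


print(is_reduced_row_echelon([[1, 0, 0, 4], [0, 1, 0, 7], [0, 0, 1, -1]]))
print(is_reduced_row_echelon([[1, 4, -3, 7], [0, 1, 6, 2], [0, 0, 1, 5]]))
```

The second matrix is in row echelon form but not in reduced row echelon form, because its pivot columns contain nonzero entries above the leading 1’s.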

Remark: Facts about echelon forms

- Every matrix has a unique RREF.

- REFs are not unique: different sequences of elementary row operations can produce different row echelon forms of the same matrix.

- The RREF and every REF of a matrix have the same number of zero rows, and their leading 1’s always occur in the same positions. These positions are called the pivot positions. A column that contains a pivot position is called a pivot column.

For example, consider the following augmented matrix:

In every row echelon form of this matrix, the leading 1’s occur in positions (row, column): (1,1), (2,3), and (3,5). These are the pivot positions. The pivot columns are columns 1, 3, and 5.
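To make the idea concrete, the sketch below reads the pivot positions off a matrix that is already in echelon form. The matrix `example` is a hypothetical one, chosen only so that its pivot positions match those listed above; it is not the matrix from the original example. Positions are reported 1-indexed as (row, column).

```python
def pivot_positions(echelon_matrix):
    """Return 1-indexed (row, column) positions of the leading entries."""
    positions = []
    for i, row in enumerate(echelon_matrix):
        for j, value in enumerate(row):
            if value != 0:
                positions.append((i + 1, j + 1))
                break  # only the first nonzero entry of a row is a pivot
    return positions


# Hypothetical augmented matrix in reduced row echelon form.
example = [
    [1, 2, 0, 3, 0, 7],
    [0, 0, 1, 4, 0, 1],
    [0, 0, 0, 0, 1, 2],
]

positions = pivot_positions(example)
pivot_columns = [column for _, column in positions]
print(positions)      # [(1, 1), (2, 3), (3, 5)]
print(pivot_columns)  # [1, 3, 5]
```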

Definition: General solution

Definition

Let a linear system have infinitely many solutions.

Then the general solution of the system is a set of parametric equations from which all solutions can be obtained by assigning numerical values to the parameters.
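As a small illustration (the system below is chosen here for the purpose and is not taken from the original notes), suppose the reduced row echelon form of an augmented matrix corresponds to the equations

$$
\begin{matrix}
x & & & + & 2z & = & 1 \\
& & y & - & z & = & 4 \\
\end{matrix}
$$

The variable $z$ has no leading 1, so it can be assigned an arbitrary parameter $t$. Solving for the leading variables gives one possible form of the general solution:

$$
x = 1 - 2t, \quad y = 4 + t, \quad z = t
$$

Each value of $t$ produces one solution; for instance, $t = 0$ gives $(x, y, z) = (1, 4, 0)$.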

Definition: Gauss-Jordan elimination

Definition

Gauss-Jordan elimination is the procedure of using elementary row operations to transform an augmented matrix to reduced row echelon form.

This procedure consists of:

- Forward phase: Zeros are introduced below the leading 1’s

- Backward phase: Zeros are introduced above the leading 1’s
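The two phases can be sketched in code as follows. This is a minimal illustration rather than a definitive implementation: it assumes the matrix is a non-empty list of equal-length rows, uses `fractions.Fraction` for exact arithmetic, and the function name `gauss_jordan` is a hypothetical choice.

```python
from fractions import Fraction


def gauss_jordan(matrix):
    """Return the reduced row echelon form of `matrix` (a list of rows)."""
    a = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(a), len(a[0])
    pivots = []  # (row, column) of each leading 1, in order of creation
    r = 0
    # Forward phase: create leading 1's and introduce zeros below them.
    for c in range(cols):
        if r == rows:
            break
        pivot_row = next((i for i in range(r, rows) if a[i][c] != 0), None)
        if pivot_row is None:
            continue  # no pivot in this column
        a[r], a[pivot_row] = a[pivot_row], a[r]  # interchange two rows
        lead = a[r][c]
        a[r] = [x / lead for x in a[r]]  # scale the row to get a leading 1
        for i in range(r + 1, rows):
            factor = a[i][c]
            a[i] = [x - factor * y for x, y in zip(a[i], a[r])]
        pivots.append((r, c))
        r += 1
    # Backward phase: introduce zeros above the leading 1's, last pivot first.
    for pr, pc in reversed(pivots):
        for i in range(pr):
            factor = a[i][pc]
            a[i] = [x - factor * y for x, y in zip(a[i], a[pr])]
    return a


system = [[1, 1, 2, 9], [2, 4, -3, 1], [3, 6, -5, 0]]
for row in gauss_jordan(system):
    print([str(x) for x in row])
```

For this example system the result should have the identity matrix on the left and the solution $x = 1$, $y = 2$, $z = 3$ in the last column.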

Definition: Gaussian elimination

Definition

Gaussian elimination is the procedure of using elementary row operations to transform an augmented matrix to row echelon form.

This procedure consists of:

- Forward phase: Zeros are introduced below the leading 1’s

- Back-substitution: Starting from the last equation, solve for variables by substituting known values into previous equations
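The forward phase followed by back-substitution can be sketched as below. This is an illustration under a simplifying assumption that is not in the text above: the system is square ($n$ equations in $n$ unknowns) and has exactly one solution. The function name `gaussian_elimination_solve` is hypothetical.

```python
from fractions import Fraction


def gaussian_elimination_solve(augmented):
    """Solve a square system with a unique solution.

    `augmented` is a list of n rows, each holding n coefficients followed
    by the constant term. Raises ValueError if no unique solution exists.
    """
    a = [[Fraction(x) for x in row] for row in augmented]
    n = len(a)
    # Forward phase: reduce the augmented matrix to row echelon form.
    for c in range(n):
        pivot_row = next((i for i in range(c, n) if a[i][c] != 0), None)
        if pivot_row is None:
            raise ValueError("system does not have a unique solution")
        a[c], a[pivot_row] = a[pivot_row], a[c]
        lead = a[c][c]
        a[c] = [x / lead for x in a[c]]  # leading 1 in row c
        for i in range(c + 1, n):
            factor = a[i][c]
            a[i] = [x - factor * y for x, y in zip(a[i], a[c])]
    # Back-substitution: start from the last equation and work upward.
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        x[i] = a[i][n] - sum(a[i][j] * x[j] for j in range(i + 1, n))
    return x


solution = gaussian_elimination_solve(
    [[1, 1, 2, 9], [2, 4, -3, 1], [3, 6, -5, 0]]
)
print([str(v) for v in solution])  # ['1', '2', '3']
```

Unlike Gauss-Jordan elimination, this sketch never introduces zeros above the leading 1’s; the substitution step does the remaining work.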

Definition: Homogeneous linear system

Definition

A homogeneous linear system is a linear system in which every constant term is zero, that is, $b_{i} = 0$ for all $i = 1, \dots, m$.

It is written as

$$
\begin{matrix}
a_{11}x_{1} & + & a_{12}x_{2} & + & \dots & + & a_{1n}x_{n} & = & 0 \\
a_{21}x_{1} & + & a_{22}x_{2} & + & \dots & + & a_{2n}x_{n} & = & 0 \\
\vdots & & \vdots & & & & \vdots & & \vdots \\
a_{m1}x_{1} & + & a_{m2}x_{2} & + & \dots & + & a_{mn}x_{n} & = & 0 \\
\end{matrix}
$$

Definition: Trivial solution

Definition

The trivial solution of a homogeneous linear system is the solution in which every unknown is zero, that is, $x_{1} = 0, x_{2} = 0, \dots, x_{n} = 0$. Any other solution is called a nontrivial solution.

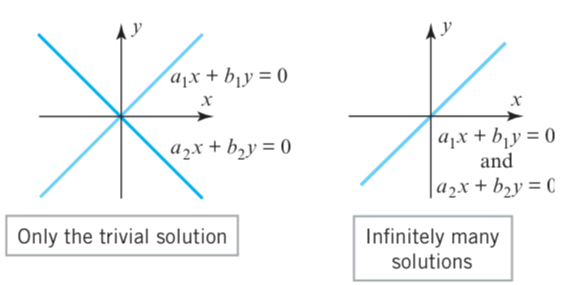

Theorem: Solution of homogeneous linear system

Every homogeneous linear system has the trivial solution and is therefore consistent. As a corollary, there are only two possibilities for its solutions:

- The system has only the trivial solution

- The system has infinitely many solutions, in addition to the trivial solution

Theorem 1.2.1: Free variable for homogeneous system

Let a homogeneous linear system have $n$ unknowns.

If the reduced row echelon form of its augmented matrix has $r$ nonzero rows,

then the system has $n - r$ free variables.

Recall the properties of RREF. As a result,

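- Each nonzero row holds exactly one leading 1

- Each leading 1 takes up exactly one column

Thus, the remaining “free” columns, that is, the columns of unknowns not taken up by leading 1’s, correspond to the free variables. With $n$ unknowns and $r$ nonzero rows, this leaves $n - r$ free variables.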
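The counting argument can be sketched in a few lines. The snippet assumes its input is an augmented matrix that is already in reduced row echelon form, with the last column holding the constants; `count_free_variables` and the matrix `rref_example` are hypothetical names and data chosen for illustration.

```python
def count_free_variables(rref_augmented):
    """Return n - r for a homogeneous system whose augmented matrix is in RREF."""
    n = len(rref_augmented[0]) - 1  # unknowns: every column except the last
    r = sum(1 for row in rref_augmented if any(v != 0 for v in row))
    return n - r


# Hypothetical RREF of a homogeneous system with 5 unknowns.
rref_example = [
    [1, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0],
    [0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

print(count_free_variables(rref_example))  # 2
```

Here there are 5 unknowns and 3 nonzero rows, so 2 unknowns (those in columns 2 and 5) are free.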

Theorem 1.2.2

A homogeneous linear system with more unknowns than equations has infinitely many solutions.

Consider a system with $m$ equations (rows) in $n$ unknowns (columns), where $m < n$. Because there are more columns than nonzero rows, there are simply not enough nonzero rows to “take up” the columns. Therefore, there are always at least $n - m$ free variables remaining.

More formally,

Proof

Consider a homogeneous linear system with $m$ equations and $n$ unknowns, where $m < n$.

In the RREF, the number of nonzero rows $r$ satisfies $r \le m$ (it cannot exceed the number of equations).

By Theorem 1.2.1, there are $n - r$ free variables. Since $r \le m < n$, we have

$$
n - r \ge n - m > 0
$$

Therefore, there is at least one free variable, giving infinitely many solutions.
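As a brief worked illustration (a system chosen here, not taken from the original notes), consider a homogeneous system with 2 equations and 3 unknowns:

$$
\begin{matrix}
x_{1} & + & x_{2} & + & x_{3} & = & 0 \\
& & x_{2} & - & x_{3} & = & 0 \\
\end{matrix}
$$

Subtracting the second equation from the first gives the reduced form $x_{1} + 2x_{3} = 0$, $x_{2} - x_{3} = 0$, which has 2 nonzero rows. With 3 unknowns, there is $3 - 2 = 1$ free variable, namely $x_{3}$. Setting $x_{3} = t$ gives

$$
x_{1} = -2t, \quad x_{2} = t, \quad x_{3} = t
$$

so every value of $t$ yields a solution, and any $t \ne 0$ yields a nontrivial one.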